Practicals


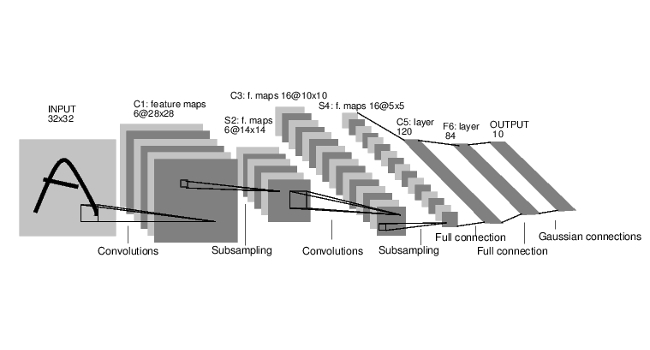

Deadline: November 15, 2020

In this assignment, you will learn how to implement and train basic neural architectures such as MLPs and CNNs for classification tasks. To do so, you will use modern deep learning libraries such as PyTorch, which provide abstracted layer classes, automatic differentiation, optimizers, and more.

Documents:


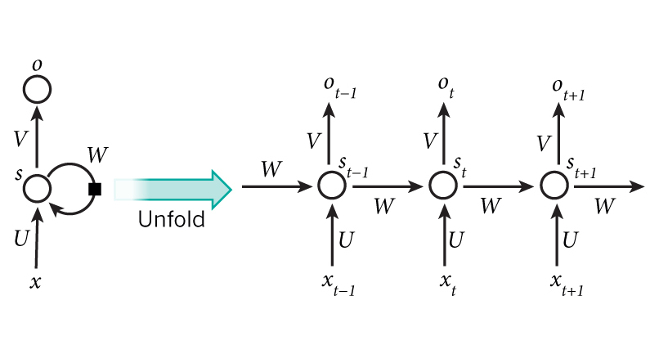

Deadline: December 1, 2020

In this assignment, you will study and implement recurrent neural networks (RNNs) and receive a theoretical introduction to graph neural networks (GNNs). RNNs are best suited to processing sequential data, such as a sequence of characters, words, or video frames. They are mostly applied in neural machine translation, speech analysis, and video understanding. GNNs are applied specifically to graph-structured data, such as knowledge graphs, molecules, or citation networks.

Documents:


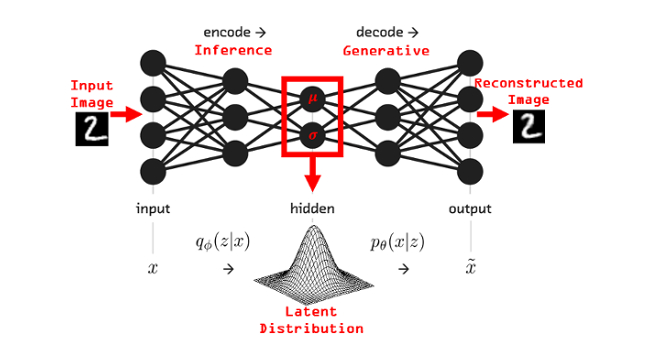

Deadline: December 20, 2020

Modelling distributions in high-dimensional spaces is difficult. Simple distributions such as multivariate Gaussians or mixture models are not powerful enough to capture complicated high-dimensional distributions. The question is: how can we design complicated distributions over high-dimensional data, such as images or audio? In this assignment, you will focus on three well-known generative models: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Normalizing Flows (NFs).

Documents:
